Windows Server 2012 SMB 3.0 File Shares: An Overview

Today I’ll describe why and how Microsoft has made it possible to store Windows Server 2012 Hyper-V virtual machines on SMB 3.0 file shares, giving you increased performance, scalability, and continuous availability at a fraction of the cost of traditional block storage.

Why Microsoft Created SMB 3.0 for Application Data

The Server Message Block (SMB) protocol is the access protocol for file shares. It is quite an old protocol, originally designed for and used over the years by information worker (IW) workloads such as shared documents on file shares. Microsoft decided to upgrade SMB from being just an IW protocol to one that also provides file-based access to application data.

Why would Microsoft decide to tackle the block-based storage giants such as iSCSI and Fibre Channel? There are many reasons to do this.

- Data growth: While we are tackling server sprawl with concepts such as Software-as-a-Service and virtualization, the amount of data that we are retaining is expanding greatly. This is because we are generating more data and retaining more of it. It is infeasible to continue using traditional block storage for this data because it cannot scale as required.

- Expense: Small and large enterprises both find block storage to be too expensive. The majority of this cost is in the Storage Area Network rather than the disks. Do we really need to store any or all of our data on these feature rich block storage platforms? Service providers need to be competitive and block storage is a huge cost center that greatly impacts the costs of products that they try to sell.

- Vendor lock-in: It is very hard to change vendors once you invest in a storage platform. Maybe prices increase. Maybe the vendor’s strategy changes. You cannot mix trays of disks from different vendors in a SAN, and migrating from one vendor to another is very difficult.

- Cloud computing: The term “software defined” is getting a lot of press at the moment. This is because a layer of abstraction makes for an easy-to-deploy and flexible solution. Block storage is hardware defined and dependent on special tools. There are standards such as SMI-S that try to hide this, but there is still a layer of specialization in the vendors’ implementations of SMI-S that prevents complete abstraction.

Microsoft announced SMB 2.2 during the preview of Windows Server “8” (aka Windows Server 2012). This would be a new version of SMB designed to continue the IW data access role but optimized to provide application data access for roles such as Hyper-V, SQL Server, and IIS, to a new storage platform via old and new media types. Eventually Windows Server “8” became Windows Server 2012, and SMB 2.2 was rebranded as SMB 3.0 to reflect the importance of SMB in the data center.

Introducing SMB 3.0 for Application Data

Any skeptic who hears that you can store production databases or virtual machines on a file share will laugh. That will be because he or she does not understand what Microsoft has accomplished. This is a layered solution; we will describe those layers in the rest of this article.

Storage Spaces

Storage Spaces first received attention as a new feature in Windows 8. Yes, it is there, but Storage Spaces benefits are best seen in Windows Server 2012. Storage Spaces allows you to aggregate individual non-RAID disks (there must be no hardware RAID) and slice them up into multiple logical units (LUNs or LUs) known as virtual disks.

We should be clear: This is not Windows software RAID of the past. Windows RAID was a dreadful feature that was only useful for generating tricky questions in certification exams. Storage Spaces is something that SAN administrators will recognize pretty quickly, because this new storage aggregation and fault tolerance feature works very similarly to disks in a modern SAN.

A storage space allows you to group many disks into a single administrative unit. These could be just a bunch of USB drives in the back of a PC, or they could be just a bunch of disks (JBOD), a tray of disks with no hardware RAID that is attached to the back of a server with a SAS connection. Such a tray can cost just a few thousand dollars. Just like with a RAID array, you can define hot spare disks in a storage space that will be engaged should an active disk be lost.

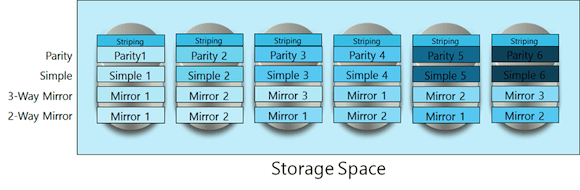

You create virtual disks from the storage space. Each virtual disk is spread across the disks in the storage space. How this is done depends on the type of virtual disk you create.

- Simple: There is no disk fault tolerance. Losing a disk in the storage space will cause you to lose the virtual disk. It has no read or write penalty.

- 2-way mirror: Data is interleaved across two disks at a time. It is like RAID10, but it is not RAID10. There is no read or write performance penalty, but you do use twice the disk space. You can lose a disk without losing data.

- 3-way mirror: This is like a 2-way mirror, but data is interleaved across three disks at a time. This means each slab of data is stored on three disks instead of one or two disks, so you use three times the disk space.

- Parity: This is somewhat like RAID5 in concept, but it is not RAID5. Parity is used to provide fault tolerance in the case that a disk is lost. Parity gives you the best storage capacity with disk fault tolerance. As with RAID5, there is a significant write performance penalty for choosing Parity.

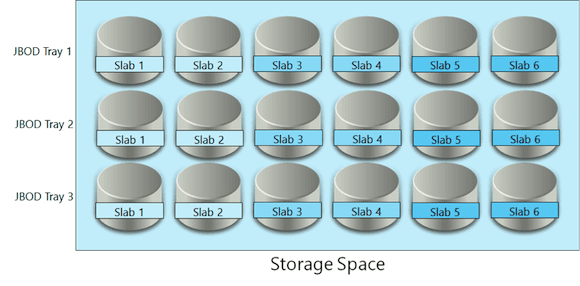

Figure 1: A Storage Space with multiple virtual disks
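The capacity trade-offs between the virtual disk types can be sketched with some simple arithmetic. The Python function below is only an illustration: the function name is hypothetical, and the parity formula assumes one disk's worth of capacity is given up to parity, as in the RAID5 comparison above. Real Storage Spaces allocation depends on details that this article does not cover.

```python
def usable_capacity_tb(raw_tb: float, disk_count: int, layout: str) -> float:
    """Estimate usable capacity (TB) for a virtual disk that spans the whole storage space.

    Assumption: parity costs one disk's worth of capacity (RAID5-like).
    """
    if raw_tb < 0 or disk_count < 1:
        raise ValueError("raw_tb must be >= 0 and disk_count must be >= 1")
    if layout == "simple":
        return raw_tb                      # no fault tolerance, no overhead
    if layout == "2-way mirror":
        return raw_tb / 2                  # every slab is stored twice
    if layout == "3-way mirror":
        return raw_tb / 3                  # every slab is stored three times
    if layout == "parity":
        if disk_count < 3:
            raise ValueError("this sketch assumes parity needs at least 3 disks")
        return raw_tb * (disk_count - 1) / disk_count
    raise ValueError(f"unknown layout: {layout!r}")


if __name__ == "__main__":
    for layout in ("simple", "2-way mirror", "3-way mirror", "parity"):
        print(layout, usable_capacity_tb(24, 12, layout))
```

With twelve 2 TB disks (24 TB raw), this model gives 24 TB for simple, 12 TB for a 2-way mirror, 8 TB for a 3-way mirror, and 22 TB for parity.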

The role of Storage Spaces by itself is not to replace a SAN. Its role is to replace hardware RAID and to make it possible to use any certified storage system for reliable storage. This extends beyond just a single JBOD tray. A storage space can include two or three JBOD trays. For example, a server can be connected to three JBOD trays and use virtual disks with 3-way mirroring. Instead of the simple example that is illustrated above, the data slabs would be spread across each tray. This means that an entire JBOD tray could be lost and the storage space and the mirror virtual disks would remain operational.

Figure 2: A Storage Space with 3-way mirroring across three JBOD trays

Note that this scenario does not work with 2-way mirrors across two JBOD trays. This is because there is no quorum.
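One way to picture the quorum problem is as a simple majority vote among the trays. The sketch below is a deliberately simplified model, not the actual logic that Storage Spaces or failover clustering uses, and the function name is hypothetical.

```python
def survives_tray_loss(trays: int, failed: int = 1) -> bool:
    """Return True if the surviving trays still form a strict majority.

    Simplified majority-vote model: each JBOD tray counts as one vote.
    """
    if trays < 1 or not 0 <= failed <= trays:
        raise ValueError("need at least one tray and 0 <= failed <= trays")
    surviving = trays - failed
    return surviving > trays / 2


if __name__ == "__main__":
    print(survives_tray_loss(3))  # two of three trays remain
    print(survives_tray_loss(2))  # one of two trays remains
```

Under this model, losing one of three trays leaves a two-out-of-three majority, while losing one of two trays leaves exactly half, which is not a majority.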

Microsoft learned a lot by observing the established block storage industry. They could implement some nice features by using a software-based solution that did not have to remain backwards compatible.

- Any virtual disk that you create can be thin provisioned. This means that you can oversubscribe storage. For example, you can create a 64 TB LUN when you only have 24 TB of disk. It is up to you (and your monitoring system to warn you) to supply enough physical disk to live up to that commitment.

- You can efficiently use different sized disks in a storage space. Adding a 4 TB disk to a RAID array made up of 2 TB disks is wasteful because you can use only half of the 4 TB disk. In a storage space you can mix disks of different sizes: the larger disks are used in full, and the storage space simply stores more data on them.
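To make the thin provisioning example concrete, the following sketch computes the oversubscription ratio for the 64 TB LUN on 24 TB of disk and shows the kind of check a monitoring system might run. The function names and the 80% warning threshold are assumptions chosen for illustration; they are not Storage Spaces settings.

```python
def oversubscription_ratio(provisioned_tb: float, physical_tb: float) -> float:
    """How many times the promised capacity exceeds the physical capacity."""
    if physical_tb <= 0:
        raise ValueError("physical_tb must be positive")
    return provisioned_tb / physical_tb


def needs_more_disk(used_tb: float, physical_tb: float, warn_at: float = 0.8) -> bool:
    """Return True once actual usage reaches the warning threshold (assumed 80%)."""
    return used_tb >= physical_tb * warn_at


if __name__ == "__main__":
    print(round(oversubscription_ratio(64, 24), 2))  # promised vs. physical
    print(needs_more_disk(used_tb=20, physical_tb=24))
```

In this example the storage is oversubscribed by a factor of about 2.67, and the warning fires once roughly 19 TB of the 24 TB is actually in use.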

SMB 3.0

While SMB 2.x was optimized for IW data access over 1 Gbps networking, SMB 3.0 was designed to provide application data access. Microsoft had to compete with 1 GbE and 10 GbE iSCSI as well as 4 Gbps and 8 Gbps Fibre Channel. SMB 3.0 not only competes with those protocols, but it can also completely demolish them in head-to-head speed tests.

SMB Multichannel

The first requirement for an application data access protocol is to be resilient. Multipath IO (MPIO) is used by block storage to aggregate two or more storage paths and to provide seamless fault tolerance if one of those paths (a NIC/HBA, a switch, or a controller) fails. Each MPIO solution is storage vendor specific and usually requires configuration.

SMB 3.0 includes SMB Multichannel. SMB Multichannel provides several features that work “out of the box” with absolutely no configuration:

- Multiple parallel streams: If the NIC that is being used for storage networking supports Receive Side Scaling (RSS), then SMB Multichannel will send multiple parallel streams of data across that NIC. This allows SMB to make full use of the available bandwidth in a single NIC, such as 10 Gbps or faster.

- Multiple paths: An SMB client will query the SMB server to see if there are multiple paths from the client to the server for SMB data transfer. If there are, then SMB Multichannel will use them after passing a threshold. This allows SMB 3.0 to detect and use multiple networking paths. NICs can be added and removed with no loss in connection.

SMB Multichannel effectively gives SMB a dynamic and configuration-free MPIO-like experience. There are only two configuration options available:

- Turn SMB Multichannel off: for diagnostics

- Constraints: Restrict SMB 3.0 traffic to specified NICs to use a dedicated storage network

In short, if you have 2 * 10 GbE NICs with RSS enabled, then you can stream SMB 3.0 data at 20 Gbps. But that is only the start of the story!
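The arithmetic behind that figure is simply the sum of the link speeds. The sketch below shows the calculation and converts the result to gigabytes per second. The function names are hypothetical, and the result is a theoretical upper bound that ignores protocol overhead and disk limits.

```python
def aggregate_gbps(nic_speeds_gbps: list[float]) -> float:
    """Theoretical combined bandwidth (Gbps) of all NICs used by SMB Multichannel."""
    return sum(nic_speeds_gbps)


def gbps_to_gigabytes_per_sec(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


if __name__ == "__main__":
    total = aggregate_gbps([10, 10])          # two 10 GbE NICs
    print(total, gbps_to_gigabytes_per_sec(total))
```

Two 10 GbE NICs give a ceiling of 20 Gbps, or 2.5 gigabytes per second.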

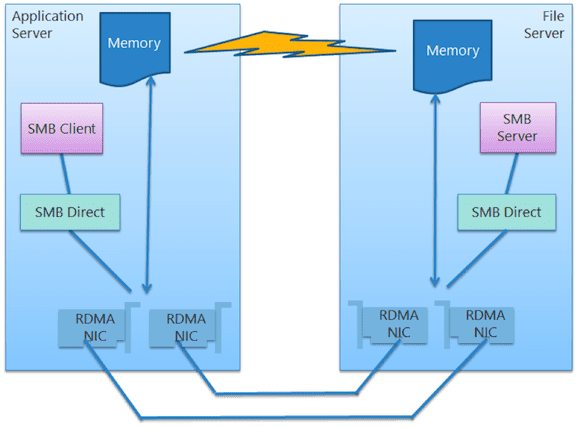

SMB Direct

The benefits of Fibre Channel storage connectivity include:

- Low latency: data travels from the client (application server) to the server (storage controller) very quickly

- High throughput: 8 Gbps Fibre Channel is available, but a pair of 10 GbE NICs can beat that bandwidth

- Low impact: storage transfer processing is offloaded to the HBA and causes very little impact to the application server’s processor(s)

Microsoft looked to a technology called Remote Direct Memory Access (RDMA) to create SMB Direct. RDMA transfers data directly from the memory of a client to the memory of a server, bypassing most of the usual network stack processing (the connection starts with the usual SMB 3.0 handshakes over TCP/IP). This means that SMB 3.0 can do the same things as Fibre Channel (low latency and low impact) using NICs that support RDMA:

- iWARP: a form of 10 GbE NIC

- RoCE (RDMA over Converged Ethernet): running at 10 Gbps or 40 Gbps

- InfiniBand: currently available at 56 Gbps but there are whispers of 100 Gbps on the way

While SMB Direct is not a requirement for using SMB 3.0 for application data (Hyper-V and so on), it does pack quite a punch for huge workloads.

Figure 3: SMB Direct with SMB Multichannel

How Fast Is SMB 3.0?

At MMS 2013, Microsoft did a live comparison of iSCSI and SMB 3.0 over the same networking (1 GbE) with the same back-end disks. SMB 3.0 slightly outperformed iSCSI. At TechEd North America 2012, Microsoft and a partner demonstrated transfer rates of 16 gigabytes per second (not Gbps). That’s more than three single-layer DVDs per second! And at TechEd Europe 2012, a live demonstration showed a Hyper-V virtual machine achieving over 1.2 million input/output operations per second (IOPS). SMB 3.0 is so fast that spinning disks (HDD) will probably become your bottleneck once you get over 10 Gbps networking. That’s a nice problem to have!

What Does All This Mean to Hyper-V?

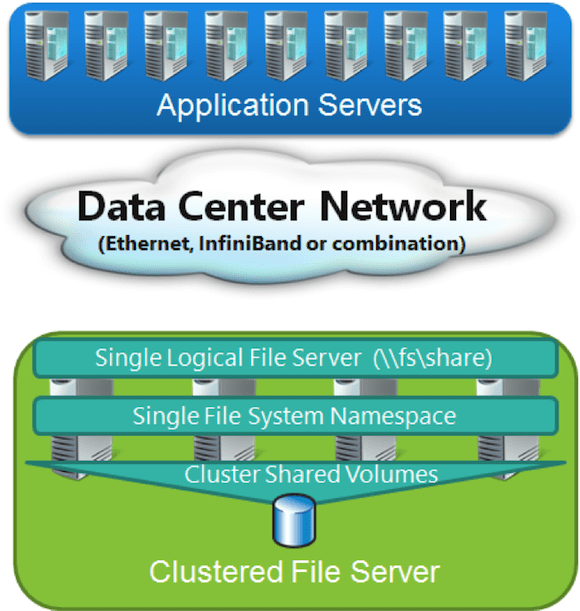

At this point we have enough technology to build a very economical and high-performing storage system. Windows Server 2012 Hyper-V supports using SMB 3.0 to store virtual machines on Windows Server 2012 file servers.

- Non-clustered hosts can store virtual machines on a file share. These non-clustered hosts can even use Live Migration to transfer virtual machines from one host to another without moving their files, assuming that permissions and Kerberos Constrained Delegation have been correctly configured.

- You can build supported Windows Server 2012 Hyper-V clusters using SMB 3.0 shares instead of traditional block storage SANs.

Figure 4: Using a Windows Server 2012 file server instead of block storage

The storage on the file server can be scaled out using Storage Spaces with economical JBODs instead of more expensive RAID-enabled direct attached storage (DAS).

Scalable (and Continuously Available) Storage

A detail-oriented person will have noticed a flaw with replacing a SAN with a single file server. A SAN has two or more controllers with dual-path networking. A file server is a single point of failure: your virtual machines (or even IIS websites or SQL Server) won’t react well if that file server has a blue screen of death.

The answer is to cluster the file server. However, the traditional file server for user data was active/passive; it does not scale out like a SAN (by adding controllers). The active/passive cluster also has a significant outage window while a file share fails over from one cluster node to another. The end user might be fine with that for 30 seconds, but Hyper-V will not tolerate that length of an outage.

This is why Microsoft created the file server for application data known as the Scale-Out File Server (SOFS). The SOFS is a special active/active clustered file server role that runs on every node in the file server cluster. The cluster uses some kind of shared storage on the back end. This can be:

- An existing traditional SAN: Maybe you have run out of SAN switch ports and want to use SMB 3.0 to share disk capacity with more application servers (Hyper-V hosts). Or perhaps you want to layer the easy, abstracted provisioning of file shares above the vendor-specific block storage in your cloud.

- JBOD with Storage Spaces: A single JBOD with support for multiple server connections (with dual-path SAS disks) is used as a SAN alternative. Storage Spaces is used to create simple or mirrored (parity is not supported in this configuration) virtual disks that are shared by all the nodes in the file server cluster. Additional JBODs can be added for 2-way and 3-way mirroring.

Note: There are some common misconceptions here. The JBODs are shared by the servers, just as a SAN would be but at a tiny fraction of the cost. There is no JBOD-to-JBOD replication, and you cannot do internal disk to internal disk replication.

You can start with two nodes in the file server cluster and grow it up to eight nodes, adding memory (cache) and network capacity.

Figure 5: The Scale-Out File Server

The volumes of the backend storage are made active/active by using Cluster Shared Volumes (CSV is supported in this role). The SOFS role is created and enabled on the cluster. It is active/active and uses the client access IP addresses of the cluster nodes. The SOFS role also creates a computer account in Active Directory, for example, MyDomain\FS. A share is created for the SOFS role. The share is stored once on the CSV (and is therefore available to all of the file server cluster nodes) and is shared by all of the cluster nodes at once, using the SOFS computer account (for example, \\FS\Share).

Clients use client-based round-robin in conjunction with a DNS lookup for the SOFS computer account to connect to the share. A very elegant SMB witness process intervenes in the case of a clustered file server outage. The affected client servers (Hyper-V hosts) pause IO for their applications (virtual machines) and are redirected to other nodes in the cluster without causing applications (virtual machines) to have any problems beyond a brief increase in storage latency.
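The connection and failover behavior described above can be pictured with a toy model. The sketch below is not the SMB witness protocol; it only imitates the visible effect: clients are spread across nodes in round-robin order, and the clients of a failed node are moved to a surviving node. The class, node names (FS1, FS2), and host names are all hypothetical.

```python
class ScaleOutShare:
    """Toy model of clients spread across, and moved between, file server nodes."""

    def __init__(self, nodes):
        if not nodes:
            raise ValueError("at least one node is required")
        self.nodes = list(nodes)
        self.online = set(nodes)
        self.assignment = {}  # client name -> node name
        self._cursor = 0      # position in the round-robin order

    def _next_online_node(self):
        # Walk the node list in round-robin order, skipping offline nodes.
        for _ in range(len(self.nodes)):
            node = self.nodes[self._cursor % len(self.nodes)]
            self._cursor += 1
            if node in self.online:
                return node
        raise RuntimeError("no file server node is online")

    def connect(self, client):
        """Assign a client (for example, a Hyper-V host) to the next online node."""
        node = self._next_online_node()
        self.assignment[client] = node
        return node

    def fail_node(self, node):
        """Mark a node offline and redirect its clients; return the moved clients."""
        self.online.discard(node)
        moved = [c for c, n in self.assignment.items() if n == node]
        for client in moved:
            self.assignment[client] = self._next_online_node()
        return moved


if __name__ == "__main__":
    share = ScaleOutShare(["FS1", "FS2"])
    share.connect("HostA")
    share.connect("HostB")
    print(share.fail_node("FS1"), share.assignment)
```

In the real system the redirected hosts briefly pause IO during the move; the model leaves that out and only tracks which node serves which client.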

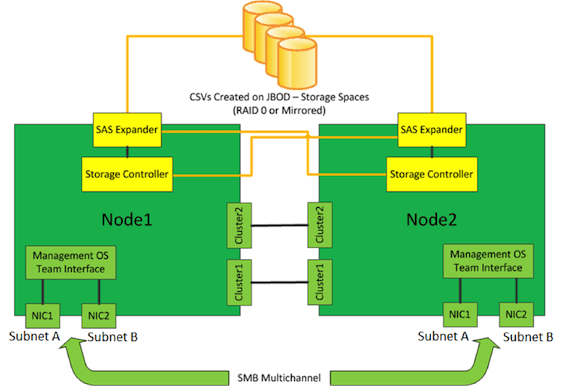

Cluster-in-a-Box (CiB)

A new form of pre-built cluster has started to come onto the market in recent times called a cluster-in-a-box (CiB). The CiB is a chassis that contains (usually) two blade servers, with their own independent power and networking, and a JBOD that supports Windows Server 2012 shared Storage Spaces.

Figure 6: Illustration of a Cluster-in-a-Box

The primary purpose of the CiB is to provide a SOFS in a box, possibly even configured by a vendor-supplied wizard. This could provide businesses with a modular SOFS deployment. However, when you inspect the CiB you will find two or more servers with expandable processor and memory capacity. You’ll also find shared storage that is supported by clustering. That means that a single CiB chassis by itself (with possible processor/RAM upgrades) is enough to build a 2-node Hyper-V cluster for the branch office or small/medium enterprise without any other servers (Windows Server 2012 clusters don’t require physical domain controllers) or storage.

Summary

Windows Server 2012 has changed storage in the data center (or cloud) forever. File-based storage, specifically SMB 3.0 file shares and Storage Spaces, can offer almost all of the features of a SAN and beat the performance of a SAN at a fraction of the cost. There are valid reasons to favor placing some data on a SAN, including LUN replication for disaster recovery and tiered storage.

Ask yourself this when you come up with those reasons: Does all of your data need these features to justify buying all of that SAN disk? Could 80% or more of your data happily sit on Windows Server 2012 storage and provide better performance for your business?

The skills required to deploy SMB 3.0 storage are Windows Server clustering and the ability to create file shares and assign permissions. These are common skills in the data center and they are easy to automate on any hardware. These reasons, in addition to the superior performance of SMB 3.0, make Windows Server 2012 file storage a strong competitor in the data center and the cloud.
